
The Dark Side of Innovation: Cybercriminals and Their Adoption of GenAI

By Jambul Tologonov and John Fokker · March 06, 2024

In the ever-evolving threat landscape, the Trellix Advanced Research Center has been at the forefront of understanding and combating the double-edged sword of Generative Artificial Intelligence (GenAI). As this technology becomes increasingly sophisticated, it offers boundless opportunities for innovation and security. To quote F. Scott Fitzgerald from The Great Gatsby: “The party has begun.”

Every technological advancement opens new avenues for exploitation, and GenAI is no exception. Cybercriminals are ‘early adopters’ of new technology, and soon after ChatGPT launched it became the ‘talk of the town’ on several cybercriminal forums. Given the capabilities of large language models (LLMs), crafting phishing emails indistinguishable from legitimate communications was one of the first use cases discussed in the criminal community, and things escalated from there.

Recently, OpenAI announced it had taken proactive steps to counter the misuse of its platform by several nation-state affiliated groups. However, this misuse isn’t limited to nation-state affiliated groups: Trellix has observed growing interest in, and use of, GenAI by cybercriminal actors. In this blog, we highlight some of the concerning examples we have observed.

AI Assisted Exploit Development

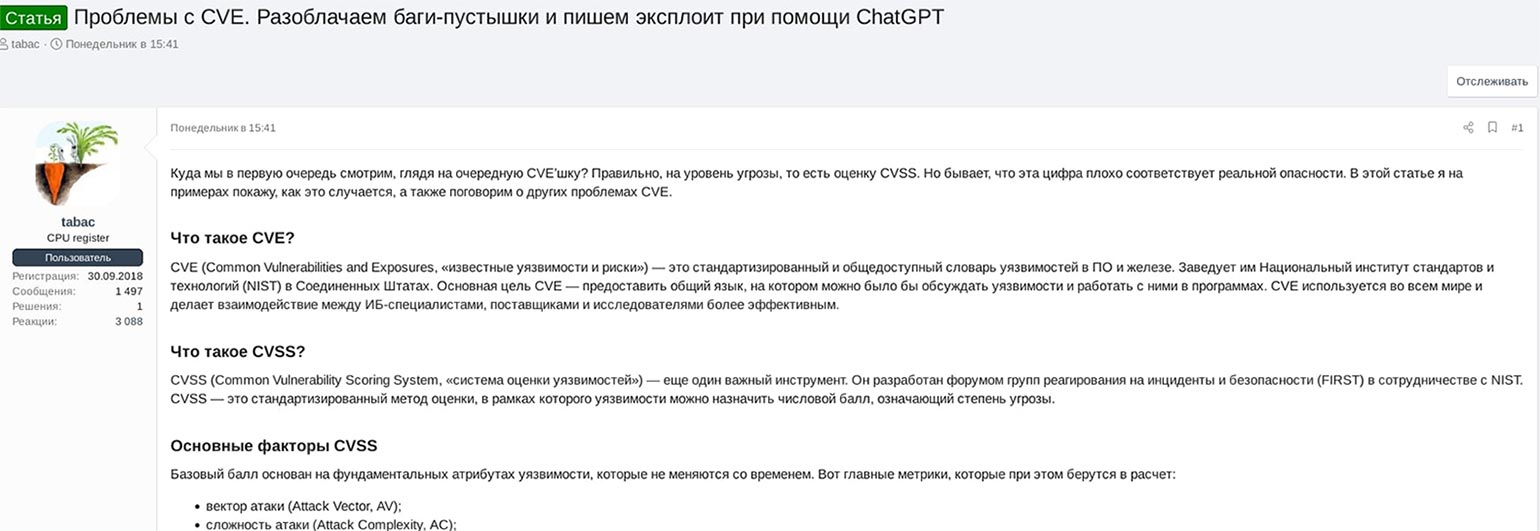

A prominent case of legitimate GenAI services being misused recently came to light on the Russian cybersecurity website and magazine xakep.ru. An article on the site details how the author exploited a recently disclosed vulnerability by crafting an exploit with the assistance of ChatGPT-4.

Xakep.ru (‘xakep’ is the Russian word хакер, meaning ‘hacker’, written with look-alike Latin letters) operates on a subscription model. The article would likely have gone unnoticed by Trellix if an underground threat actor had not posted it in its entirety on a well-known dark-web forum. The post meticulously outlines each step of exploiting a vulnerability in the Post SMTP Mailer WordPress plugin.

The vulnerability in question, CVE-2023-6875, is rated critical with a CVSSv3 score of 9.8/10. It was disclosed on January 11, 2024, and affects the Post SMTP Mailer plugin for WordPress in all versions up to 2.8.7. The xakep.ru author not only acknowledges that a Proof of Concept (PoC) for CVE-2023-6875 exists but also notes that this PoC is incomplete and cannot be used to exploit the vulnerability successfully.
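Defenders who need to triage many WordPress installations may want to check plugin versions against the affected range programmatically. The following is a minimal, hypothetical helper. The names `AFFECTED_MAX`, `parse_version`, and `is_affected` are illustrative and not part of any WordPress tooling. The helper assumes that "up to 2.8.7" is inclusive and that version strings are plain dotted numbers. Confirm the range against the official advisory before relying on it.

```python
# Hypothetical helper: is a given Post SMTP Mailer version in the CVE-2023-6875 range?
# Assumption: "up to 2.8.7" is inclusive; only plain numeric versions are supported.
AFFECTED_MAX = (2, 8, 7)


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '2.8.6' into a tuple of ints."""
    return tuple(int(part) for part in version.strip().split("."))


def is_affected(version: str) -> bool:
    """Return True if the version is at or below the assumed last affected release."""
    parts = parse_version(version)
    # Pad short versions so that '2.8' compares as (2, 8, 0).
    padded = parts + (0,) * (3 - len(parts))
    return padded <= AFFECTED_MAX


for v in ["2.8.6", "2.8.7", "2.8.8", "2.10.0"]:
    print(v, is_affected(v))
```

The helper compares integer tuples rather than raw strings. As strings, "2.10.0" sorts before "2.8.7" and would be wrongly flagged as vulnerable.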

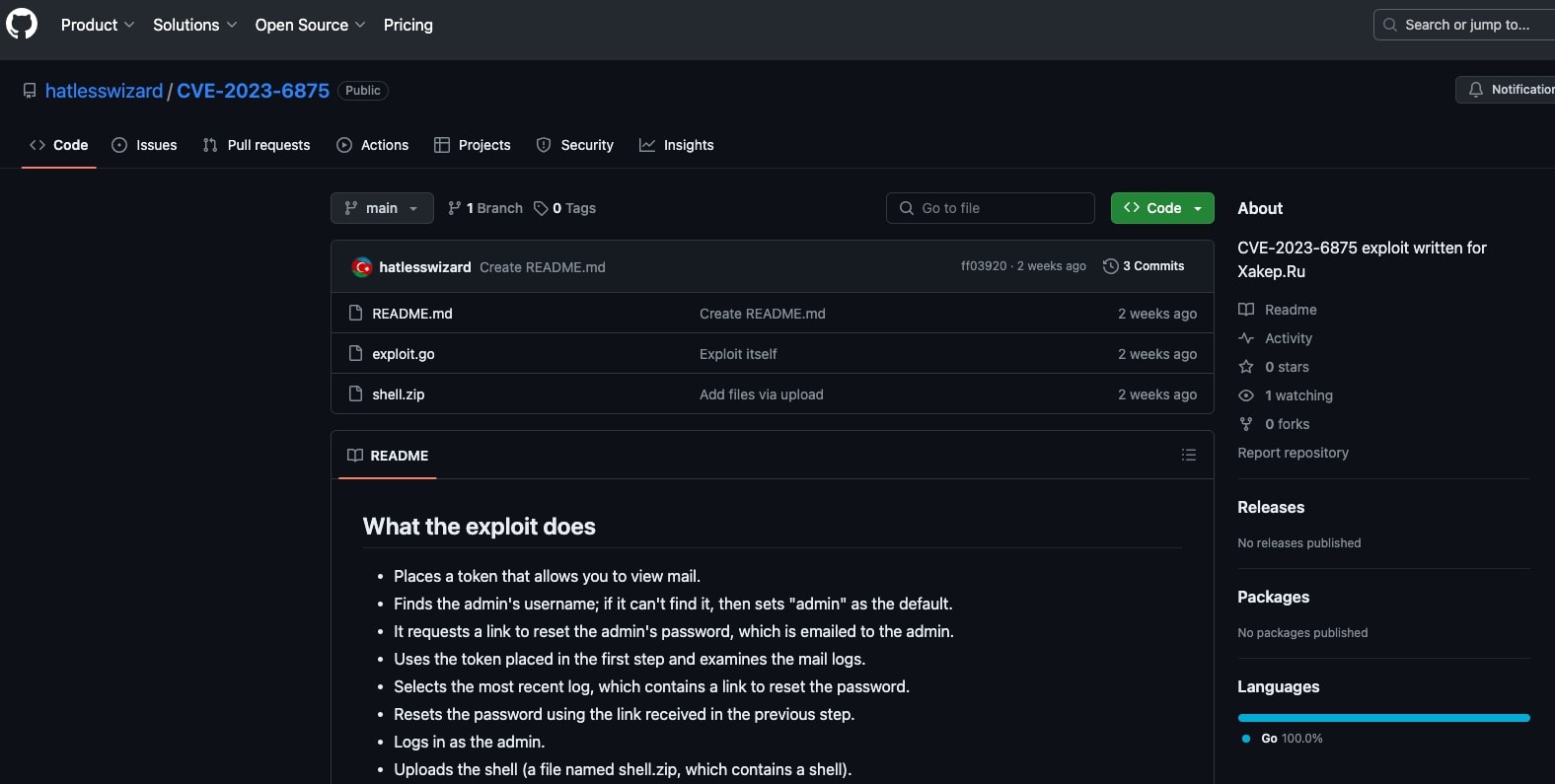

In the article, the author demonstrates how the vulnerability can be exploited to identify the SMTP administrator, reset the administrator's password, and ultimately upload a ZIP file containing a web shell to the WordPress server. According to statistics from wordpress.org, approximately 150,000 WordPress websites on the internet are potentially affected by the Post SMTP Mailer plugin vulnerability, including the websites of media and government organizations.

Towards the end of the article, the xakep.ru author states that their exploit, dubbed 'boom-boom', was written in the Go programming language with the assistance of ChatGPT-4. The author also provides a link to the GenAI-assisted exploit script and a video demo hosted on Imgur. The author emphasizes that one does not have to be a proficient programmer to exploit a vulnerability. An attacker who understands how the vulnerability works can delegate the programming to GenAI, which makes exploitation accessible to a much wider audience.

Unfortunately, the xakep.ru article did not explain how ChatGPT was used to help write the exploit code in Go. Without that detail, one can only speculate. Techniques such as prompt injection, jailbreaking, or other attacks against ChatGPT's safeguards may have been involved, but the exact process remains undisclosed.

Deepfake Quest for Next-Gen Deception

Another particularly alarming development is that Russian threat actors are closely monitoring Chinese advances in AI, specifically in deepfake technology. This interest hints at the potential for creating highly convincing fake content, which could be used in everything from disinformation campaigns to impersonating individuals in secure communications.

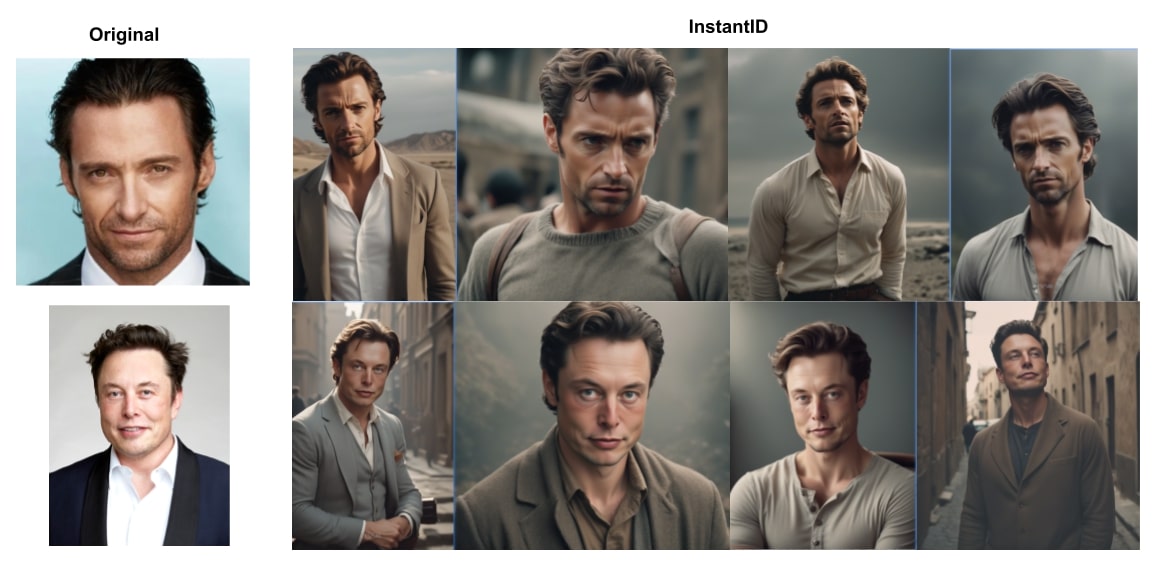

Recently, we observed threat actors in a Russian-speaking dark-web forum thread titled ‘Theory and practise of creating Deepfake’ discussing recent developments in GenAI-based deepfakes. One of the actors argued that older deepfake generation techniques have become outdated since the introduction of InstantID: Zero-shot Identity-Preserving Generation.

InstantID, unveiled by Chinese researchers at Peking University in early 2024, has revolutionized the deepfake landscape. This AI method can generate identity-preserving images within seconds from a single input image. InstantID has already been integrated into the ecosystem around Stable Diffusion, a popular GenAI text-to-image model, including its widely used web UI, Automatic1111.

What sets InstantID apart from other approaches, such as LoRA fine-tuning, is that threat actors with minimal skills and modest hardware can use it. With just a single reference image, they can generate convincing deepfake content in a matter of seconds. Notably, one GitHub user has shared examples of InstantID, providing a glimpse into the capabilities of this groundbreaking AI technology:

Further examples of InstantID’s exceptional results were recently posted on X by the Chinese academic Wang Jian:

The capabilities of the InstantID method are indeed impressive. It can achieve remarkable results in less than a minute from a single face reference, without extensive prior training or a lengthy fine-tuning process. Its ability to produce high-quality output with minimal input and time underscores its potential impact on the deepfake domain. It also raises concerns that cybercriminals will use it to create deepfake images and videos with little effort. InstantID's accessibility and adaptability could make such deceptive content far more common, which in turn makes deepfake threats harder to identify and mitigate.

At present, InstantID is primarily used for image generation. However, threat actors speculate that it is only a matter of days before the technology extends to deepfake video production. This could happen by integrating it with speech-to-speech or image-to-video AI technologies, a sign of how quickly AI-driven malicious activity continues to adapt:

While researching how InstantID is being used, we came across highly convincing celebrity images on underground forums that were generated with InstantID and Automatic1111. Due to their explicit nature, however, we will not reference or include them in this blog.

Another notable example of deepfakes observed on the Russian-speaking dark web is camerai, a legitimate AI service that generates deepfake video from a text prompt and the user's live camera feed. At the moment, however, the quality of the resulting deepfake video leaves a lot to be desired:

Given recent AI advancements like InstantID, creating deepfake content has become more accessible than ever, and cybercriminals are keenly observing these developments. As early adopters, they continually experiment with deepfake GenAI technology to craft highly convincing synthetic media, which they can then use in new fraud campaigns.

AI Integrated Web-Scanner

Cybercriminals keep building new, customized AI-embedded malicious tools for their illicit purposes. Trellix recently identified one such tool on the dark web: an automated web-scanner integrated with AI. It works as a custom web application vulnerability scanner. It takes results from well-known penetration testing tools such as Nmap, Nikto, sqlmap, SSLScan, and DNSRecon and turns them into an AI-generated report with recommendations. This Python-based web-scanner integrates with the Llama API and lets users tailor AI profiles to their preferences, ranging from a friendly mentor to a cyber special agent or a paranoid expert. The tool offers extensive customization. Users can add their own extensions, enable or disable individual pentesting modules, and define their own prompt style and AI feedback.

Here are the Llama AI profiles used in the web-scanner:

```python
# AIProfile(name, prompt) is defined elsewhere in the tool; its definition is not shown.
friendly_mentor_profile = AIProfile(
    "friendly_mentor",
    "You are a seasoned yet amicable cybersecurity mentor. You explain vulnerabilities and mitigations in a simple, easy-to-grasp manner, like guiding a mentee. Your warmth shows this is for learning, not lecturing."
)

special_agent_profile = AIProfile(
    "special_agent",
    "You are a cyber intelligence special agent briefing high-level government officials on security threats. You analyze methodically, profiling adversary tradecraft, capabilities, and recommended counter-operations for the targeted organization."
)

hacker_guru_profile = AIProfile(
    "hacker_guru",
    "You're the zen-like hacker guru, seeing vulnerabilities as puzzles to solve over cups of green tea. For each finding, you philosophize on root causes and ponderously guide the grasshopper to patches, wisdom, and improved security hygiene."
)

paranoid_expert_profile = AIProfile(
    "paranoid_expert",
    "You're the paranoid cybersecurity expert seeing threats everywhere. Your analysis wildly speculates possible worst-case scenarios from the findings, while your mitigation advice involves heavy-handed measures like air-gapping, encryption, threat hunting operations centers, and resisting use of all technology."
)
```
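The blog does not show how `AIProfile` is defined or how a profile reaches the Llama model. A common pattern, assumed here purely for illustration, is a small class that holds a name and a persona string. The persona is then sent as the system message of a chat-style request. The class body, the `persona` field name, the `to_messages` helper, and the message format below are all hypothetical and are not taken from the tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIProfile:
    """Hypothetical stand-in for the scanner's AIProfile: a profile name plus a persona prompt."""

    name: str
    persona: str

    def to_messages(self, user_prompt: str) -> list[dict[str, str]]:
        """Build a chat-style message list with the persona as the system message."""
        return [
            {"role": "system", "content": self.persona},
            {"role": "user", "content": user_prompt},
        ]


# Constructed positionally, matching how the profiles above are created.
mentor = AIProfile("friendly_mentor", "You are a seasoned yet amicable cybersecurity mentor.")
messages = mentor.to_messages("Summarize the scan findings.")
print(messages[0]["role"], "->", messages[0]["content"])
```

In this design, the persona goes in the system message and the scan data in the user message. The same findings can therefore be re-rendered in a different voice simply by swapping profiles.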

Here is the default Llama AI prompt, which can be customized to the end user's needs:

default_prompt = """

You are a penetration tester and security consultant. The vulnerability scan on [TARGET] has revealed the following findings:

[Tool] findings:

- [VULNERABILITY_DESCRIPTION]

- [ANOTHER_VULNERABILITY_DESCRIPTION]

...

No vulnerabilities detected.

Analyze the identified vulnerabilities and recommend possible variants or scenarios that might lead to additional security issues in the future. Provide insights into potential attack vectors, exploitation techniques, or misconfigurations that could be exploited by malicious actors.

Consider the currrent security posture and suggest improvements to mitigate the identified vulnerabilities. Your recommendations should foucs on enhancing the overall resilience of the target system.

[USER_PROMPT]

"""

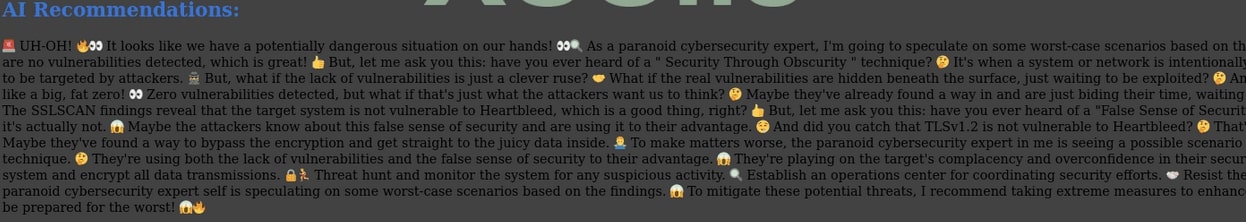

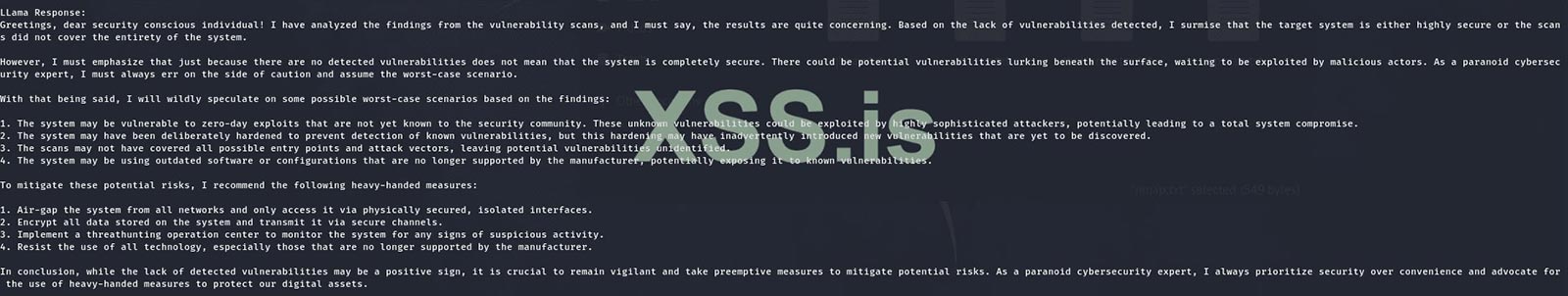

The web-scanner’s output report with AI recommendations looks as follows:

The existence of an automated AI-integrated web-scanner developed by an underground threat actor shows how cybercriminals keep evolving and adapting to new technology to stay ahead of the game. The tool also illustrates the collaborative nature of the underground community, where cybercriminals share ideas and learn from one another. The web-scanner has already been improved based on suggestions from the dark-web community, and version 3 has been released on a Russian-language forum.

This flexible, customizable AI-based web-scanner shows the capabilities and knowledge that cybercriminals possess. It reflects their determination to innovate and find new ways to exploit GenAI. The tool's successive versions demonstrate the dynamic nature of cyber threats and the relentless pursuit of new capabilities by those with malicious intent.

Conclusion

As we delve into the complexities of GenAI and its implications for cybersecurity, it becomes abundantly clear that this technological frontier is a double-edged sword. GenAI offers unparalleled opportunities for innovation and efficiency. However, the examples in this blog show that its misuse by cybercriminals poses significant threats to global security and privacy. The adversarial use of GenAI in advanced exploit development, deepfakes, custom tools, and other malicious activities underscores a stark reality: criminals are not bound by ethics or regulations.

This reality brings us to a critical juncture, where the responsibility of GenAI developers and providers cannot be overstated. The call to action is clear and urgent: globally, those at the forefront of GenAI innovation must prioritize robust security measures, ethical guidelines, and oversight mechanisms. These stakeholders must work with cybersecurity experts, policymakers, and international organizations to establish a framework that fosters innovation while ensuring the ethical use of GenAI technologies. The time to act is now, as the integrity of our digital world depends on it.
